Issue #9101

closedContent_artifact is not updated

Description

When content is created with "on_demand" policy (only remote artifacts) and then is the same content synced with 'immediate' policy (artifact downloaded) content_artifact.artifact of that content is not updated.

Looks QueryExistingContent stage should check this update.

Reproducer script:

#!/usr/bin/env bash

export REPO1=$(head /dev/urandom | tr -dc a-z | head -c5)

export REPO2=$(head /dev/urandom | tr -dc a-z | head -c5)

export REMOTE1=$(head /dev/urandom | tr -dc a-z | head -c5)

export REMOTE2=$(head /dev/urandom | tr -dc a-z | head -c5)

# sync content on_demand

pulp file repository create --name $REPO1

pulp file remote create --name $REMOTE1 \

--url 'https://fixtures.pulpproject.org/file/PULP_MANIFEST' \

--policy 'on_demand'

pulp file repository sync --name $REPO1 --remote $REMOTE1

export ADDED=$(pulp file repository version show --repository $REPO1 --version 1 | \

jq -r '.content_summary | .added | ."file.file" | .href')

http :24817${ADDED}

echo "here are no checksums as only remote artifacts are present"

echo "press [enter] to continue"

read

# sync same content immediate

pulp file repository create --name $REPO2

pulp file remote create --name $REMOTE2 \

--url 'https://fixtures.pulpproject.org/file/PULP_MANIFEST' \

--policy 'immediate'

pulp file repository sync --name $REPO2 --remote $REMOTE2

export ADDED=$(pulp file repository version show --repository $REPO2 --version 1 | \

jq -r '.content_summary | .added | ."file.file" | .href')

http :24817${ADDED}

echo "as artifacts downloaded checksums should be visible "

echo "if you check in django shell or db, content_artifacts does not have updated 'artifact' relation"

Files

Related issues

Updated by dkliban@redhat.com over 3 years ago

Updated by dkliban@redhat.com over 3 years ago

- Priority changed from Normal to High

- Severity changed from 2. Medium to 3. High

- Triaged changed from No to Yes

- Sprint set to Sprint 101

Updated by dkliban@redhat.com over 3 years ago

Updated by dkliban@redhat.com over 3 years ago

- Priority changed from High to Normal

- Severity changed from 3. High to 2. Medium

Updated by ipanova@redhat.com over 3 years ago

Updated by ipanova@redhat.com over 3 years ago

- Sprint changed from Sprint 101 to Sprint 102

Updated by lmjachky over 3 years ago

Updated by lmjachky over 3 years ago

- Status changed from NEW to ASSIGNED

- Assignee set to lmjachky

Updated by dalley over 3 years ago

Updated by dalley over 3 years ago

- Related to Issue #8305: Deleting a remote used as source for live content corrupts ContentArtifact records added

Updated by lmjachky over 3 years ago

Updated by lmjachky over 3 years ago

(1) One of the possible solutions is to update the corresponding reference (i.e., artifact) for ContentArtifact objects in the ContentSaver stage. This stage is responsible for saving Content and it assumes that once content is saved, it does not need to create/update the content again. I am attaching a patch that resolves the reported issue. Yet, I will think about a better approach because the attached patch causes django to invoke a database call for every content unit when it was already saved, which can result in performance degradation.

(2) Maybe replacing the call ContentArtifact.objects.bulk_get_or_create(content_artifact_bulk) with ContentArtifact.objects.bulk_create_or_update(content_artifact_bulk) could be a better solution after making this code to run even when content_already_saved == False?

(3) Another solution would be to add another stage (e.g., ContentUpdater) that will go through saved content and will check whether DeclarativeContent objects are correctly mapped to the database (i.e., ContentArtifact). Then, it will call bulk_update on the content that needs to be updated. This is only redemption after ignoring the issue in stages which should handle this in the first place.

Updated by gerrod over 3 years ago

Updated by gerrod over 3 years ago

- File ca_bulk_update.patch ca_bulk_update.patch added

After looking over your suggestions I came up with this patch to do all the work in (hopefully) two db calls. I did basic checks to make sure it fixes the problem, but I haven't check if it covers all edge cases and if it only does two db calls.

Updated by lmjachky over 3 years ago

Updated by lmjachky over 3 years ago

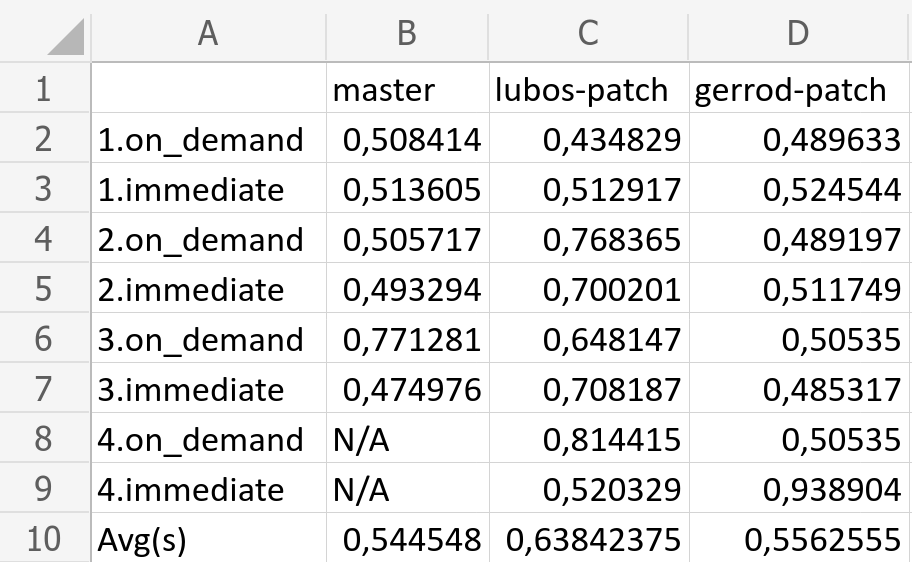

I performed a couple of benchmark tests to see if Gerrod's patch does not decrease the performance (since filter() is called n-times; similarly to my update() call). I run the attached Pavel's script on a repository with 5,000 files multiple times in a row (this means that only the first 2 syncs were really creating new content; the rest of them were checking the existing content). I enabled profiling and logged service time average for the ContentSaver stage.

In comparison to my patch, the Gerrod's patch almost did not increased the time spent in the stage when content already existed in the database. Therefore, I conclude that we should follow the approach Gerrod has presented.

Updated by daviddavis over 3 years ago

Updated by daviddavis over 3 years ago

- Priority changed from Normal to High

I just ran into this with Satellite QE. The bad part is that Content gets stuck without artifact--there's no way to fix the problem other than to delete the Content and start over. I think this is warrants higher priority.

Updated by dalley over 3 years ago

Updated by dalley over 3 years ago

lmjachky Is the attached patch ready to go, then? Could a PR be created prior to Monday / Tuesday, so that this BZ can be addressed?

Updated by dalley over 3 years ago

Updated by dalley over 3 years ago

- Status changed from ASSIGNED to POST

- Assignee changed from lmjachky to gerrod

- Sprint/Milestone set to 3.15.0

Updated by dalley over 3 years ago

Updated by dalley over 3 years ago

- Copied to Backport #9261: Backport #9101 "Content_artifact is not updated" to 3.14.z added

Updated by pulpbot over 3 years ago

Updated by pulpbot over 3 years ago

Added by gerrod about 3 years ago

Added by gerrod about 3 years ago

Updated by gerrod about 3 years ago

Updated by gerrod about 3 years ago

- Status changed from POST to MODIFIED

Applied in changeset pulpcore|c2b732e55174e022d4f3e2a51d601b0ae71732aa.

Updated by pulpbot about 3 years ago

Updated by pulpbot about 3 years ago

- Status changed from MODIFIED to CLOSED - CURRENTRELEASE

Properly update present ContentAtifacts after immediate sync

fixes: #9101